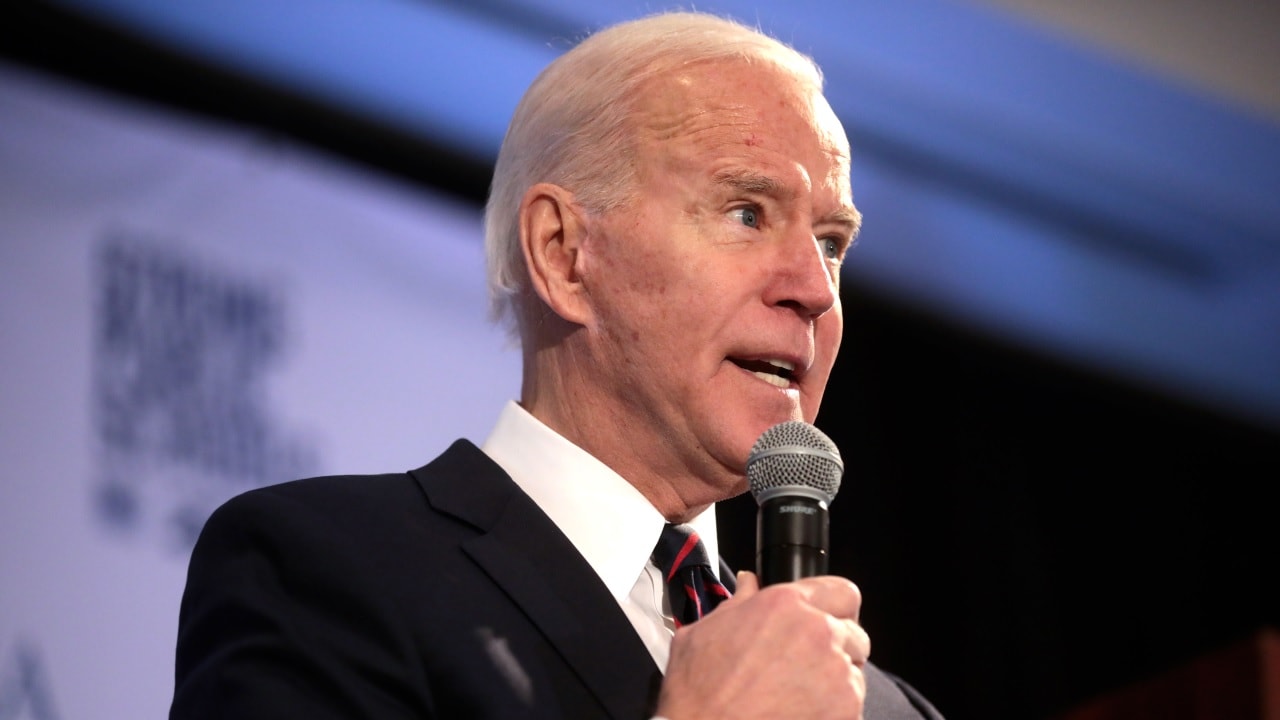

President Joe Biden signed an executive order on Monday aimed at regulating artificial intelligence (AI) and ensuring its responsible use. During a press conference, Biden characterized it as the most significant action any government has taken to address the safe deployment of AI.

The Rapid Pace of Technological Change

Biden acknowledged the rapid pace of technological change, predicting more advancements in the next five to ten years than in the previous five decades. He stressed that while AI has the potential to improve lives, it can also pose risks, which makes a proactive approach imperative.

Vice President Kamala Harris, the U.S. representative at a global AI safety summit in the UK, underlined the moral, ethical, and societal duty of governments to ensure that AI is developed safely and beneficially. Harris said the United States is a global leader in AI and that the executive order should serve as an international model.

Key Provisions of the Executive Order

While Biden called the executive order a “bold action,” he stressed that Congress must act swiftly to guarantee the secure deployment and advancement of AI.

Under the order, companies developing the most powerful AI systems will be required to share test results with the U.S. government before releasing those systems. Stringent testing guidelines will also be established. The order outlines several key AI directives, including:

– National Security: Companies developing AI models that pose threats to national security, economic security, or public health and safety must share their safety test results with the government.

– Red-Team Testing: Guidelines for red-team testing, where assessors emulate rogue actors, will be established.

– AI-Made Content Watermarking: Official guidance on watermarking AI-generated content will address the risk of harm from fraud and deepfakes.

– Biological Synthesis Screening: New standards for screening biological synthesis will be developed to mitigate the potential threat of AI systems contributing to the creation of bioweapons.

White House Chief of Staff Jeff Zients underscored the order’s urgency, emphasizing the need to move as fast as the technology itself.

Responsible AI

The executive order covers a range of AI-related issues, including privacy, civil rights, and consumer protection. Civil liberties and digital rights groups generally praised the order as a positive first step toward responsible AI development and governance.

However, the effectiveness of the directives depends on how they are enforced. Alexandra Reeve Givens, CEO of the Center for Democracy and Technology, highlighted the need for detailed, actionable guidance to ensure the directives have their intended effect.

Privacy Protections and Surveillance Concerns

The order includes privacy protections, which are crucial given that the U.S. has no comprehensive federal privacy law. Caitriona Fitzgerald, Deputy Director of the Electronic Privacy Information Center, noted that the order is a significant step toward establishing fairness, accountability, and transparency in the use of AI.

Surveillance watchdog groups, such as the Surveillance Tech Oversight Project, voiced concerns. They argue that AI auditing techniques could be manipulated and that some AI applications, such as facial recognition, should be banned outright rather than governed by guidelines.

The executive order sets out a timeline for implementation, with safety and security items facing the earliest deadlines, ranging from 90 to 365 days. The order also provides direction to the U.S. military and intelligence community on the safe and ethical use of AI.

International Cooperation

The White House pledged to accelerate the development of AI standards in collaboration with international partners. The G7 group of nations also published a code of conduct on Monday that emphasizes responsible AI development, external testing of models, and the use of AI to address global challenges such as climate and health.

The executive order reflects the United States’ commitment to harnessing AI’s benefits while ensuring public safety, privacy, and ethical use. Supporters see it as a pivotal step in shaping the future of AI technology and its global governance.

Georgia Gilholy is a journalist based in the United Kingdom who has been published in Newsweek, The Times of Israel, and the Spectator. Gilholy writes about international politics, culture, and education.